Monday, September 24, 2007

Federated Search

Andy spoke persuasively about the need to rationally assess what we are attempting to accomplish with our available resources. His main points were to identify the largest target group whose needs must be addressed, then address those needs using as few resources as possible and be clever about this – don’t reinvent stuff as it is likely that there is something out there that will work for you…

He cited using Google Coop as a federated search engine, which could well meet most needs in this area... (also see a proof of concept for Google federating here...)

If you satisfy this group you will probably have met the needs of many others as well. This strategy will hopefully leave you with some capacity to target strategically important areas...

Tuesday, August 21, 2007

Virtual Machines Windows Services Modifier

By default VMware services are started automatically when the PC starts up (there are five of these services). This is fine if the virtual machines are in use, but if we only want to run the virtual clients manually on demand, then we would of course like to have all the host PC’s RAM available for normal use when the virtual machines aren’t running!

My answer to this situation is in two parts:

Change the way the VMware services are started by using the Management Console to switch them from Automatic to Manual startup. This means the services are present but not running.

I then run a very short Powershell script to start the services and, once they are started, open the VMware server application. Yes, it takes about 20 seconds longer to start the VMware application this way, but it works! I have a second script that will shut the VMware services down again if you want to reclaim RAM after using the virtual machines…

Thursday, July 26, 2007

Randomly Select Data From A List

I was asked recently to provide a process that could select patron data from a list randomly. We wanted to select a random portion (20%) of our patrons for a LibQUAL survey. The process needed to be able to randomly select a row of data (a record) for a number of times, determined by the user. In this way it would be possible to randomly select a list of names from the original list...

My solution was to write a Powershell script. The script expects to find a text file called "Possible.txt" in the same directory as the script. The script is run with one argument that is the number of lines to be randomly selected. Once run the script creates a second file called "Selection.txt" which will contain the selected lines of data in the order they were selected.
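The selection step can be sketched as follows. This is a minimal Python sketch, not the Powershell script itself: it assumes each patron is drawn at most once (sampling without replacement) and that blank lines are skipped, neither of which is stated above. The function name `select_random_lines` is made up for illustration.

```python
import random
import sys
from pathlib import Path


def select_random_lines(lines, count, rng=random):
    """Return `count` distinct non-blank lines in the order they were drawn."""
    candidates = [line for line in lines if line.strip()]
    if count > len(candidates):
        raise ValueError("asked for more lines than the list contains")
    # random.sample draws without replacement and keeps the draw order.
    return rng.sample(candidates, count)


def main(argv):
    count = int(argv[1])
    # Both files live next to the script, as described above.
    folder = Path(argv[0]).resolve().parent
    lines = (folder / "Possible.txt").read_text(encoding="utf-8").splitlines()
    chosen = select_random_lines(lines, count)
    (folder / "Selection.txt").write_text(
        "".join(line + "\n" for line in chosen), encoding="utf-8"
    )


if __name__ == "__main__":
    if len(sys.argv) != 2:
        print("usage: python select.py NUMBER_OF_LINES")
    else:
        main(sys.argv)
```

For a 20% sample the argument would simply be one fifth of the number of lines in "Possible.txt".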

Links:

Sunday, June 3, 2007

Centralised Administration and Client Update Script

Our reason for developing this script was to modularise an existing script that was just becoming huge and difficult to maintain. Now we have separate small scripts focusing on discrete problems, which are much better for troubleshooting. Also, if we want to target specific workstations we just create a new directory, put the target IPs or machine names into a .dat file in the directory and copy in the script(s) desired...

This area of support can cost a lot of consultant time, what with customising and implementing services that are not included in the original images or supported by the WSUS update system. Scheduling maintenance tasks to run at regular intervals, and copying in or installing files and shortcuts for new or updated applications, can also be labour intensive if done manually, and maintaining consistency between machines can be very difficult.

Links:

Friday, May 11, 2007

Clicker Application

Yet clickers are another physical item for users to carry; they have a cost, and if they are not available when needed their value diminishes. I developed this .NET application to run in a "computer lab" environment as a replacement for a physical clicker. The advantage for the user is that they don't have to buy it or remember to bring it to a session.

The clicker software consists of 2 applications. One runs on a "master" computer and the other runs on any clients that are attached to the same network. A third element is required for these two applications to interact and that is a MySQL database. The database is a simple two table database which stores the client responses to the questions. Once the users have responded, the "Master" application then retrieves the data from the database and displays it in a window which can be projected for all to see.
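To make the data flow concrete, here is a rough Python sketch of a two-table store and the tally the "Master" application would display. Everything in it is an assumption for illustration: the table and column names are invented, and SQLite (from the Python standard library) stands in for MySQL so the sketch runs on its own. `INSERT OR REPLACE` is SQLite syntax; MySQL would use `REPLACE INTO`.

```python
import sqlite3


def open_store():
    """Create an in-memory stand-in for the two-table database."""
    db = sqlite3.connect(":memory:")
    db.executescript(
        """
        CREATE TABLE questions (id INTEGER PRIMARY KEY, text TEXT NOT NULL);
        CREATE TABLE responses (
            question_id INTEGER NOT NULL REFERENCES questions(id),
            client      TEXT NOT NULL,
            answer      TEXT NOT NULL,
            PRIMARY KEY (question_id, client)
        );
        """
    )
    return db


def record_response(db, question_id, client, answer):
    """Client side: store one answer, replacing any earlier one from that client."""
    db.execute(
        "INSERT OR REPLACE INTO responses VALUES (?, ?, ?)",
        (question_id, client, answer),
    )


def tally(db, question_id):
    """Master side: count the answers given to one question."""
    rows = db.execute(
        "SELECT answer, COUNT(*) FROM responses "
        "WHERE question_id = ? GROUP BY answer ORDER BY answer",
        (question_id,),
    )
    return dict(rows.fetchall())
```

Keying responses on question and client means a user who changes their mind is counted only once, which is one plausible design and not necessarily the one the Clicker uses.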

I have the Clicker working in 4 "lab" environments running on XP workstations. I have also tested the Clicker on an "upgraded" Vista operating system. All seems to work as expected! I will test it further on a "clean" installation and update this comment accordingly with the results.

Links:

Monday, April 16, 2007

Carnival of the infosciences #69

Thanks to all those who took the time to submit articles and blog posts for consideration. It was much appreciated.

I have decided to divide this carnival into three sections: The Fun, The Geeky and Strictly Library.

The Fun

As if web 2.0, library 2.0, and opac 2.0 weren't enough, we now have 'supermarket 2.0'! Thanks: @ the Library

Strictly Library

Google's Librarian Central points us towards an article in Searcher Magazine explaining the Google Book Search digitization project.

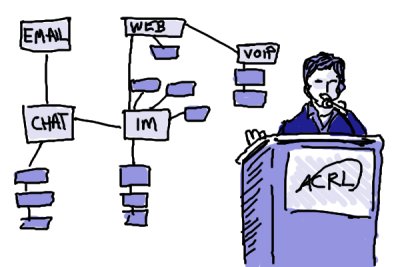

Derik Badman presents his Comments and drawings from the ACRL Conference.

Also from the ACRL Conference, Jenny Levine writes about the latest experiments in IM reference services.

Connie Crosby let me know about Ellyssa Kroski's blogpost 'Information Design for the New Web', saying, "Ellyssa Kroski at Infotangle has put together an amazing outline of good, current web design with examples of existing websites. This is for her presentation at Computers in Libraries on Monday, and she has shared it all in a blog post. Outstanding!"

Here are the issues his post mentions:

- The Changing Nature of Publishing

- The Changing Nature of Metadata

- The Changing Nature of customers

- What's worth paying for?

The Geeky

Code4lib have published the videos and podcasts from the code4lib2007 conference.

Daniel Chudnov points us at his article about standards for computers in libraries.

Well, that's this edition of the Carnival of the Infosciences. Please submit your suggestions to the next carnival host using the carnival submission form.

The next host for this carnival hasn't yet been announced...

Tuesday, April 3, 2007

TimeKeeper Application

The TimeKeeper application was built to address these situations by providing a countdown timer to limit the usage of the equipment without staff intervention. The original application was built using VB6, but I have rewritten it using VB .NET and it is now way more robust. In its current form it has been in use for over a year in the Windows XP environment and its presence is taken for granted by both our users and staff. In fact the use of this application has assisted greatly in improving our staff-user relationships, as Library staff now do a lot less "policing" of the workstations.

When building an application which is acting as a "policeman" you have to ensure that the users are informed about what it will do! This is accomplished by several screens which come to the front and have to be acknowledged by the user before they can continue to use the workstation. The first screen at logon tells the user what is happening and starts a countdown timer. When the timer gets near to the end of the session, at least 2 warnings are presented to the user (which they have to acknowledge) before the session is closed by the TimeKeeper.

The TimeKeeper application requires that the user cannot access the workstation "Task Manager" interface. This is a setting in Group Policy that would normally be denied to users in a managed network environment in any case. (The reason for doing this is that if the user can stop the TimeKeeper process in the Task Manager then the application is negated.) Assuming that this condition is met, the TimeKeeper is robust and reliable.

I have tested TimeKeeper on an "upgraded" Vista operating system. All seems to work as expected! I will test further on a "clean" installation and I will amend this page accordingly.

Links:

Monday, April 2, 2007

A Carnival comes to LibraryCogs

LibraryCogs has the privilege of hosting the next 'Carnival of the Infosciences'.

The way a blog carnival works is that a collection of blogs on similar topics take turns at hosting the carnival. The host gets sent articles and blog posts from around the web, assembles the links and adds his or her own thoughts on each one. The Carnival of the Infosciences links to articles relating to the library and information sciences field.

So start sending in your suggestions now. The Carnival is here on April 16th.

Thursday, March 22, 2007

Voyager and Blackwell

1. MOUR (Mini Open Url Redirector)

Collection Manager has a user-based preference to set up an OpenURL resolver. The idea is that you point this at your OpenURL server and it will let you know if a book is already in your catalogue. Unfortunately our OpenURL resolver doesn't link into our library catalogue properly for books, and users would have to click through two links to even see the results. The Mini Open Url Redirector simply redirects the browser to the library catalogue search for the book concerned, with no need to go through any intermediate screens.

2. Currency Split

Collection Manager exports orders to an FTP server, from which they can be downloaded and imported into Voyager. Unfortunately Voyager can only handle orders which all use the same currency. This script takes the file produced by Collection Manager and splits it into a bunch of files, one for each currency. These files can then be imported separately into Voyager.
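The splitting idea can be sketched in a few lines of Python. The real layout of the Collection Manager export is not described here, so the sketch assumes a purely hypothetical format of one order per line, tab-separated, with the currency code in the last field. The function names and output file names are likewise invented for illustration.

```python
from collections import defaultdict
from pathlib import Path


def currency_of(order_line):
    """Hypothetical layout: tab-separated fields with the currency code last."""
    return order_line.rstrip("\n").split("\t")[-1].strip().upper()


def split_by_currency(order_lines, key=currency_of):
    """Group order lines by currency, skipping blank lines."""
    groups = defaultdict(list)
    for line in order_lines:
        if line.strip():
            groups[key(line)].append(line.rstrip("\n"))
    return dict(groups)


def write_groups(groups, out_dir, stem="orders"):
    """Write one file per currency, e.g. orders_USD.txt, and return the paths."""
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    paths = []
    for currency, lines in sorted(groups.items()):
        path = out_dir / f"{stem}_{currency}.txt"
        path.write_text("".join(line + "\n" for line in lines), encoding="utf-8")
        paths.append(path)
    return paths
```

Passing the currency lookup in as `key` keeps the grouping logic independent of the file format, so only `currency_of` would need to change for the real export.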

Surveying Users

The features of PHPSurveyor that really attract me are:

This product is worth the trouble of implementing although users will need to have some support to initially get it to work.

Friday, March 16, 2007

PERL Data File Search Script

This PERL script is designed to locate all the HTML files in a single directory. These files are named for the hostname of the workstation that generated them when they were created by the Powershell - Get Inventory Script. The script either searches for computer names and displays the complete data set for each computer on one page, or it searches each file to locate the data string requested and displays the results. Which type of search is done depends on the user's choice in the original search (Hostname or Keyword). If a Keyword search is selected, any HTML tags in the source code are removed before comparing the remaining text data with the string being sought. This reduces the number of false hits!

By searching all the data files harvested (copied) from the workstations there is no need to set up a database to store the data, with the interaction overhead this causes. Because we are searching ALL the files' content we are able to isolate discrete data quickly. For example, if I am asked for the number of installations of EndNote we have, I can set up a Keyword search for EndNote. The returned data will advise me of the number of workstations with EndNote (124 as of writing) and then list the names of the workstations, followed by the version details of EndNote on each workstation.
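The Keyword search described above can be sketched roughly as follows. This is a Python illustration and not the PERL script itself: the function names are invented, the tag stripping is a simple regular expression, and matching is assumed to be case-insensitive and line by line.

```python
import re
from html import unescape
from pathlib import Path

# Matches anything that looks like an HTML tag, attributes included.
TAG = re.compile(r"<[^>]+>")


def visible_text(html):
    """Drop tags so that attribute values cannot produce false hits."""
    return unescape(TAG.sub(" ", html))


def keyword_hits(html, keyword):
    """Return the text lines (whitespace collapsed) that contain the keyword."""
    needle = keyword.lower()
    hits = []
    for line in visible_text(html).splitlines():
        if needle in line.lower():
            hits.append(" ".join(line.split()))
    return hits


def search_directory(directory, keyword):
    """Map each hostname (the file name without .html) to its matching lines."""
    results = {}
    for path in sorted(Path(directory).glob("*.html")):
        html = path.read_text(encoding="utf-8", errors="replace")
        hits = keyword_hits(html, keyword)
        if hits:
            results[path.stem] = hits
    return results
```

With this shape, `len(search_directory(folder, "EndNote"))` would give the number of workstations reporting EndNote, and the dictionary values would carry the version details.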

Links:

Sunday, March 4, 2007

A Spellchecker for Webvoyage

As promised I have put all the code and some limited documentation on the web. Naturally I'm not going for a Pulitzer Prize in writing, or aiming to make the documentation absolutely complete, but if you have some input you want to make into the webpages, the documentation or the code, or just want to discuss the ideas involved, please feel free to contact me. My email address is j.brunskill AT waikato.ac.nz

Links:

Combining Datafiles

The zip file download of NGCombine.exe is mounted on my personal website. The downloaded zipfile will need to be opened and NGCombine.exe can be copied into the system directory of your workstation or to a directory of your choosing. If you load the file into the system directory you will not need to use a full filepath to call it.

NGCombine.exe has been limited to processing 1,000,000 records (that is, lines of text) per file, which I think is plenty for most of us! Details of the call syntax follow:

NGCombine.exe

Combines the contents of two text files sorting the data and eliminating empty lines. (If applied to a single file, the file is sorted.)

Syntax: NGCombine [/a [X:\...]] [/n [X:\...]] [/o [X:\...]] [/e] [/r] [/?]

Parameters

/a [X:\...]

Required: Specifies filepath for the file containing original or "Archive" data.

/n [X:\...]

Specifies filepath for the file containing "New" or incoming data. If this file is not specified, a data sort will occur on the original or "Archive" data only.

/o [X:\...]

Specifies filepath for the output data. If this file is not specified, the default output filepath is the original or "Archive" data filepath.

/e

Eliminates any duplicate lines of data.

/r

Reverses the sort order.

/?

Displays help at the command prompt.
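For readers who want to see the behaviour spelled out, the parameters above map onto a short Python sketch like the one below. It is an illustration of the documented behaviour, not the source of NGCombine.exe. The function names are invented, and the sort order is simply Python's default string ordering, which may differ from the real tool (for example in how upper and lower case are ranked).

```python
from pathlib import Path


def combine(archive_lines, new_lines=(), eliminate_duplicates=False, reverse=False):
    """Merge two sets of lines, drop empty lines, optionally de-duplicate, then sort."""
    merged = [line.rstrip("\r\n") for line in list(archive_lines) + list(new_lines)]
    merged = [line for line in merged if line.strip()]
    if eliminate_duplicates:  # corresponds to /e
        merged = list(set(merged))
    return sorted(merged, reverse=reverse)  # reverse corresponds to /r


def combine_files(archive, new=None, output=None, **options):
    """File wrapper: archive ~ /a, new ~ /n, output ~ /o (defaults to the archive)."""
    lines = Path(archive).read_text(encoding="utf-8").splitlines()
    extra = Path(new).read_text(encoding="utf-8").splitlines() if new else []
    result = combine(lines, extra, **options)
    Path(output or archive).write_text(
        "".join(line + "\n" for line in result), encoding="utf-8"
    )
    return result
```

Calling `combine_files("archive.txt")` with no other arguments sorts the archive file in place, matching the single-file case described above.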

Tuesday, February 27, 2007

Compare your Library with LibraryThing

Tim writes:

"Over on Next Generation Catalogs for Libraries, NCSU's Emily Lynema asked me: 'Do you have any idea of the coverage of non-fiction, research materials in LT? Have you done any projects to look at overlap with a research institution (or with WorldCat)?' No, we haven't. And I'm dying to find out, both for academic and non-academic libraries."

So I decided to see how hard it would be to write a script to compare the LibraryThing dataset against a simple export from our library system. It turns out it didn't take too long. And I have posted the perl source code on my personal website so you no longer have that as an excuse for not helping Tim out.

Here are the stats for The University of Waikato Library:

Out of approximately 500,000 bib records in our database I found only about 178,460 unique ISBNs. LibraryThing has 1,774,322 ISBNs so they have ten times as many as us! Note: the 178,460 figure turned out to be wrong because of an error during normalisation. The number is now 292,073, which makes LibraryThing's total about six times ours.

UoW Library and LibraryThing have

| Database | Total ISBNs | Unique ISBNs | Percentage Unique |
| --- | --- | --- | --- |
| University of Waikato | 292,073 | 218,696 | 74.88% |
| LibraryThing | 1,774,322 | 1,700,943 | 95.86% |

Total ISBNs in common: 73,377
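The comparison boils down to normalising every ISBN and intersecting two sets. The sketch below shows that idea in Python. It is not the posted perl script, and its normalisation rules are assumptions: it takes the first whitespace-separated token, strips hyphens, accepts ISBN-10s as they are and converts 978-prefixed ISBN-13s to ISBN-10 by recomputing the check digit. The function names are invented.

```python
import re


def isbn13_to_isbn10(isbn13):
    """Convert a 978-prefixed ISBN-13 to ISBN-10 by recomputing the check digit."""
    core = isbn13[3:12]
    total = sum(int(digit) * weight for digit, weight in zip(core, range(10, 1, -1)))
    check = (11 - total % 11) % 11
    return core + ("X" if check == 10 else str(check))


def normalise_isbn(raw):
    """Return a bare ISBN-10 string, or None if the field holds no usable ISBN."""
    parts = raw.split()
    if not parts:
        return None
    token = parts[0].replace("-", "").upper()  # drops qualifiers such as "(pbk.)"
    if re.fullmatch(r"\d{9}[\dX]", token):
        return token
    if re.fullmatch(r"978\d{10}", token):
        return isbn13_to_isbn10(token)
    return None


def compare(ours, theirs):
    """Count distinct ISBNs on each side, the overlap, and what is unique to each."""
    a = {isbn for isbn in map(normalise_isbn, ours) if isbn}
    b = {isbn for isbn in map(normalise_isbn, theirs) if isbn}
    return {
        "ours_total": len(a),
        "theirs_total": len(b),
        "common": len(a & b),
        "ours_unique": len(a - b),
        "theirs_unique": len(b - a),
    }
```

A normalisation rule that is too strict silently drops ISBNs, so it is worth counting how many input fields return `None` before trusting the totals.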

I figure since they asked the question, NCSU Libraries should be next...

External Links:

Update: Updated figures after discovering I had dropped a whole bunch of ISBNs when normalising them.

Wednesday, February 21, 2007

Citizen Preservation, A vision for the future.

We were privileged to have the NZ National Librarian (Penny Carnaby) speaking about the National Digital Heritage Archive (NDHA) as well as other projects such as the National Resource Discovery System, the concept of kete (basket of knowledge) and a whole lot more.

She talked a lot about the way the internet is evolving, with web 2.0 concepts bringing content creation to the hands of everyday people, and how that is changing the way content needs to be archived and preserved.

This got me thinking: if web 2.0 is all about giving ordinary people the tools and resources they need to produce content, shouldn't we also begin to put the tools and resources in people's hands to preserve and describe their content?

Preservation isn't exactly a foreign concept to most people. I mean, people collect stamps and antique furniture, and they rewrite grandma's favorite chocolate cake recipe in a new book so that it won't get lost. We all like to hold on to family heirlooms and all manner of odds and ends. So is there a place for a national library, or for that matter anyone, to make tools and resources available to everyday people and set them loose to protect and preserve their content, history, and the like?

I asked that question (slightly more succinctly, I might add) of Penny Carnaby, and I love her response. Note: This is stated as I remember it, not even slightly 'word for word'.

"Imagine this picture: an elderly man walks into the national library, his grandson reaching up to hold his hand. Under their arms are books filled with old New Zealand and international stamps collected over the decades. Together the two sit down at a computer and begin to scan in and annotate the collection, making it available to the world."

It is such a nice picture, isn't it? The people who care about the data, the people who have the data, are able to release that data so that others have access to it. I don't think by any stretch of the imagination that the national library will undertake to build such a system, but it is a vision of what the future may be like.

I don't know about you, but I'd love to see it happen!

Monday, February 19, 2007

Automated Equipment Inventory

The script outputs the data it retrieves to an HTML file located on the C:\ drive of the workstation that it is run on. We leave a copy of the output file on the C:\ drive so we can open it if we navigate to the C$ share over the network. We also copy the output file onto a server, which allows us to browse or search the files using another script... Typically the output will look like the output from my personal computer. I really like the way this script gets details like the processor ID number, the MAC address and the serial numbers off the BIOS. It makes the output file almost like a "fingerprint" of the machine. (Which is great news if a laptop goes missing and the police need the details AND you have a copy of the file on a server!)

The script is available together with more details about it here. We used Windows Powershell to solve this problem because it is a new product (at the start of its life cycle) and is compatible with the current and upcoming Microsoft operating system releases.

If you are using Windows XP you will need to install Microsoft Windows Powershell on the workstations on which you want to run the script. You will also need to set the Powershell script execution policy by running "set-executionpolicy remotesigned". The prerequisites for Powershell on XP are Service Pack 2 and the Microsoft .NET Framework version 2.0.

12-06-2007 Added a new section that identifies all the usernames that have a profile on the workstation....

14/07/2007 Inserted new section to display Norton/Symantec AntiVirus Status if the application is present...

Sunday, February 18, 2007

Welcome

We hope to be adding some more content here soon.